What Is a Neural Network?

Neural networks are a fundamental concept in the field of artificial intelligence and machine learning. They are computational models inspired by the structure and functioning of biological neural networks in the human brain. Neural networks are composed of interconnected nodes, called neurons or units, organized in layers.

The main components and ideas behind neural networks are:

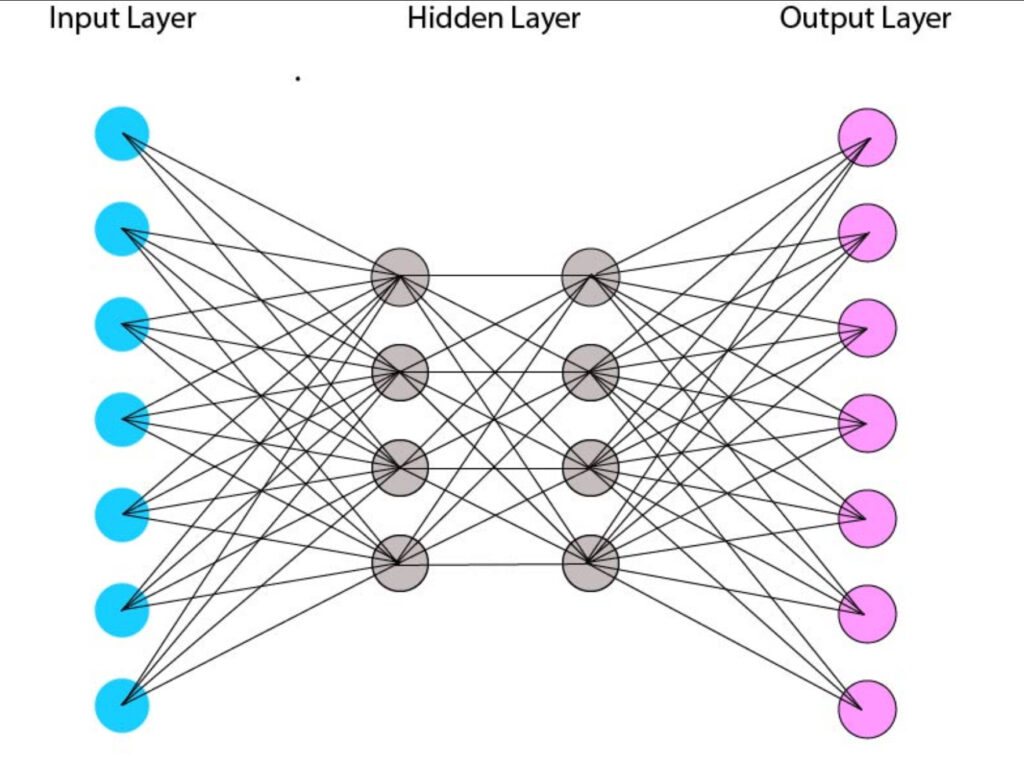

- Basic Structure: A neural network typically consists of an input layer, one or more hidden layers, and an output layer. The input layer receives the raw data, each hidden layer transforms the output of the layer before it, and the output layer produces the network’s prediction.

- Neurons (Nodes): Neurons are the building blocks of neural networks. Each neuron receives input signals, combines them into a weighted sum, applies an activation function to that sum, and passes the result on as its output signal. Neurons in consecutive layers are linked by weighted connections, and each weight determines how strongly one neuron’s output influences the next neuron.

- Weights and Biases: Each connection between neurons has a weight, which represents the strength of that connection. During training, the network adjusts these weights based on the input data and the desired output. Each neuron also typically has a bias, a learned constant added to its weighted sum. The bias shifts the point at which the neuron activates, so the neuron can produce a non-zero output even when all of its inputs are zero.

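- Activation Function: The activation function of a neuron determines its output from the weighted sum of its inputs. Common choices include the sigmoid, tanh (hyperbolic tangent), and ReLU (Rectified Linear Unit) functions, along with softmax, which is applied across a whole layer (usually the output layer of a classifier) to turn its values into probabilities. Activation functions introduce non-linearity into the network. Without them, any stack of layers would collapse into a single linear transformation, and the network could not learn complex patterns and relationships in the data.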

- Training: Training a neural network involves presenting it with a dataset of input-output pairs and adjusting the network’s weights and biases to reduce the difference between the predicted outputs and the true outputs. That difference is measured by a loss function, and optimization algorithms such as gradient descent and its variants iteratively update the network’s parameters to minimize it.

- Backpropagation: Backpropagation is a key algorithm used to train neural networks. It computes the gradient of the loss function with respect to the network’s parameters (weights and biases) by applying the chain rule layer by layer, starting at the output and working back toward the input. The optimizer then uses this gradient to move each parameter in the opposite direction of the gradient, which reduces the loss.

- Applications: Neural networks have a wide range of applications across various domains, including image recognition, natural language processing, speech recognition, recommender systems, and autonomous vehicles. They have achieved state-of-the-art performance in many tasks, thanks to their ability to learn complex patterns from large datasets.
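
The pieces described above (layers, weights and biases, activation functions, training, and backpropagation) can be sketched in a few dozen lines of plain Python. This is a minimal illustration rather than a reference implementation. The 2-3-1 layer sizes, the sigmoid activation, the squared-error loss, the XOR toy dataset, the learning rate, and every function name (`init_network`, `forward`, `mean_squared_error`, `train_step`, `train`) are assumptions chosen for the example.

```python
import math
import random


def sigmoid(z):
    """Sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_network(n_in, n_hidden, seed=0):
    """Create one hidden layer and one output neuron with random weights."""
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(n_in)]
                     for _ in range(n_hidden)],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": 0.0,
    }


def forward(net, x):
    """Forward pass: weighted sum plus bias, then activation, layer by layer."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
        for ws, b in zip(net["w_hidden"], net["b_hidden"])
    ]
    out = sigmoid(sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"])
    return hidden, out


def mean_squared_error(net, data):
    """Average squared difference between predictions and targets."""
    return sum((forward(net, x)[1] - y) ** 2 for x, y in data) / len(data)


def train_step(net, data, lr):
    """One pass over the data, updating parameters after every example."""
    for x, y in data:
        hidden, out = forward(net, x)
        # Backpropagation (chain rule), for the loss (out - y) ** 2.
        # Gradient with respect to the output neuron's weighted sum:
        delta_out = 2.0 * (out - y) * out * (1.0 - out)
        # Gradient with respect to each hidden neuron's weighted sum,
        # computed with the output weights as they were before the update:
        delta_hidden = [
            delta_out * w * h * (1.0 - h)
            for w, h in zip(net["w_out"], hidden)
        ]
        # Gradient descent: move each parameter against its gradient.
        net["w_out"] = [w - lr * delta_out * h
                        for w, h in zip(net["w_out"], hidden)]
        net["b_out"] -= lr * delta_out
        for j, dh in enumerate(delta_hidden):
            net["w_hidden"][j] = [w - lr * dh * xi
                                  for w, xi in zip(net["w_hidden"][j], x)]
            net["b_hidden"][j] -= lr * dh


# Toy dataset (an assumption for this sketch): the XOR function.
XOR_DATA = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 0.0),
]


def train(epochs=3000, lr=0.5, seed=0):
    """Train a 2-3-1 network on XOR; return it with the loss before and after."""
    net = init_network(2, 3, seed)
    loss_before = mean_squared_error(net, XOR_DATA)
    for _ in range(epochs):
        train_step(net, XOR_DATA, lr)
    loss_after = mean_squared_error(net, XOR_DATA)
    return net, loss_before, loss_after


if __name__ == "__main__":
    net, before, after = train()
    print(f"loss before training: {before:.4f}")
    print(f"loss after training:  {after:.4f}")
    for x, y in XOR_DATA:
        print(x, "target", y, "prediction", round(forward(net, x)[1], 3))
```

Calling `train()` returns the trained network together with the loss measured before and after training. On this toy problem the loss should drop, though how close the predictions get to the targets depends on the random initialization, the number of epochs, and the learning rate. Real projects would normally rely on a library that computes gradients automatically instead of deriving them by hand as done here.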

Overall, neural networks are computational models that have reshaped machine learning and artificial intelligence. They provide a flexible framework for learning complex patterns and relationships directly from data, which makes it possible to build systems that perform a wide range of tasks.