Introduction

Introducing DragGAN, the latest sensation to captivate the internet after the success of generative AI tools such as the chatbots ChatGPT and Bard and the AI image generator DALL·E. Developed by a team of researchers from the Max Planck Institute for Informatics, MIT, the University of Pennsylvania, and Google, DragGAN sets out to revolutionise generative image editing.

DragGAN: Unleash Your Creative Power with Revolutionary AI Image Editing

With DragGAN, anyone can edit images like a seasoned professional, without complex, cumbersome photo editing software. Driven by generative AI, this tool lets users express their creativity through a simple point-and-drag interface.

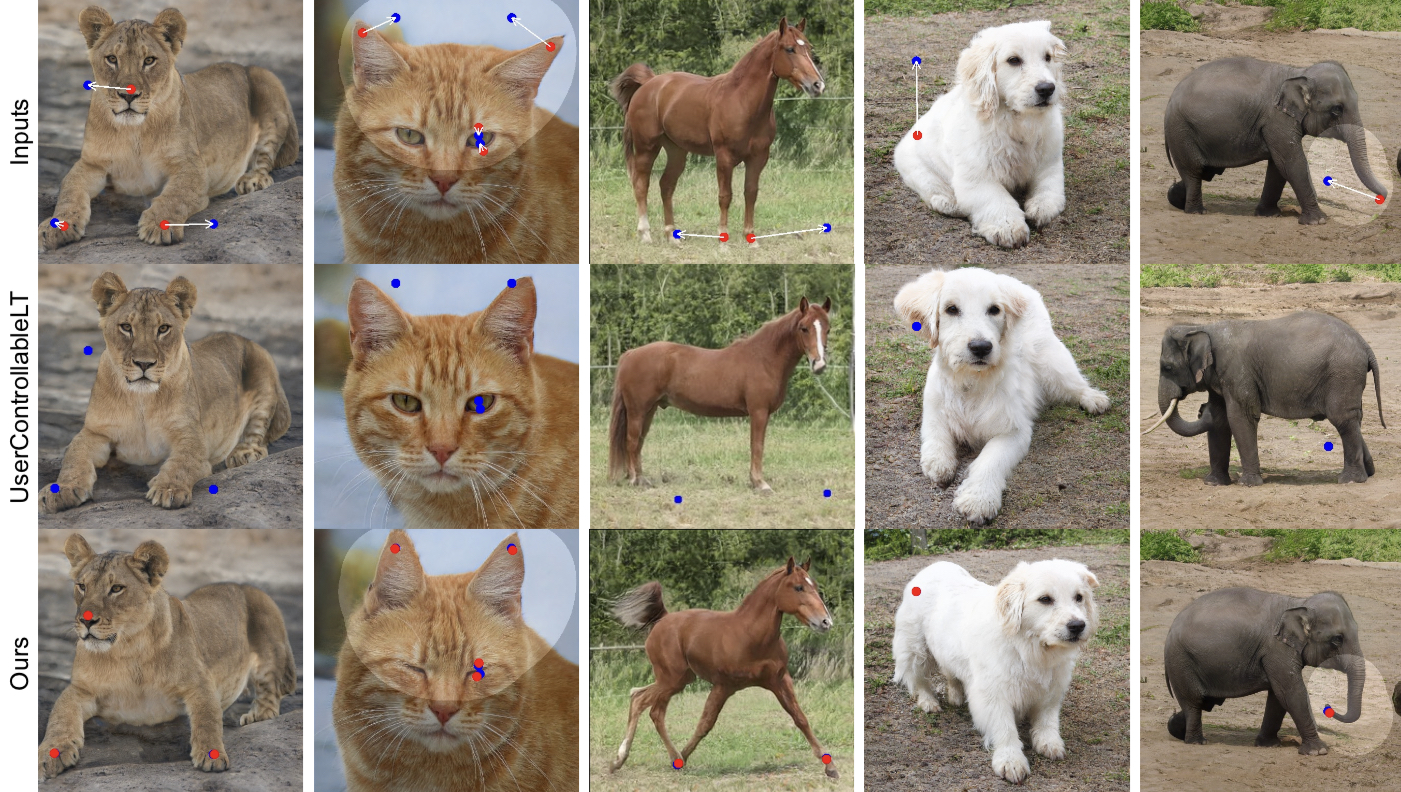

At its core, DragGAN (from the paper "Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold") builds on a pre-trained GAN. Because every edit stays on the GAN's learned image manifold, the results follow the user's input precisely while remaining realistic. Unlike previous approaches, the method does not rely on domain-specific modeling or auxiliary networks. Instead, DragGAN combines two components: a latent code optimization step (motion supervision) that progressively moves multiple handle points toward their target positions, and a point tracking procedure that re-locates the handle points after each step so that their trajectories are followed accurately. Both components exploit the discriminative features in the GAN's intermediate feature maps, which lets DragGAN produce pixel-precise image deformations at interactive speed.
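To make the point tracking idea more concrete, here is a minimal sketch in Python. It assumes, as a simplification, that tracking is a nearest-neighbour search: the handle point's feature vector from the original image is compared against the feature vectors in a small square window around the handle's current position, and the closest match (by L1 distance) becomes the new handle position. The feature map is a toy nested list standing in for a GAN's intermediate feature map, and the names `track_point` and `radius` are hypothetical, not part of any released DragGAN code.

```python
from typing import List, Tuple

# Toy feature map: feature_map[y][x] is a feature vector (list of floats).
FeatureMap = List[List[List[float]]]
Point = Tuple[int, int]  # (x, y) pixel coordinates


def l1_distance(a: List[float], b: List[float]) -> float:
    """Sum of absolute differences between two feature vectors."""
    return sum(abs(u - v) for u, v in zip(a, b))


def track_point(feature_map: FeatureMap, handle_feature: List[float],
                current: Point, radius: int) -> Point:
    """Hypothetical tracker: return the position inside a (2*radius+1)^2 window
    around `current` whose feature is closest (L1) to `handle_feature`.
    The window is clipped at the borders of the feature map."""
    height, width = len(feature_map), len(feature_map[0])
    cx, cy = current
    best_point, best_dist = current, float("inf")
    for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
            dist = l1_distance(feature_map[y][x], handle_feature)
            if dist < best_dist:
                best_point, best_dist = (x, y), dist
    return best_point


if __name__ == "__main__":
    # A 5x5 map of 2-D features; the distinctive feature [1.0, 1.0] has
    # "moved" to (3, 2) after an optimisation step.
    fmap = [[[0.0, 0.0] for _ in range(5)] for _ in range(5)]
    fmap[2][3] = [1.0, 1.0]
    print(track_point(fmap, handle_feature=[1.0, 1.0], current=(2, 2), radius=1))
```

The small search radius reflects the assumption that each optimisation step moves the content only slightly, so the handle can be found again near its previous position without scanning the whole image.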

Get ready for a new era of image editing as DragGAN paves the way for intuitive yet powerful point-based manipulation on the generative image manifold.

The research paper has been released, and the code is due to be made public in June 2023.

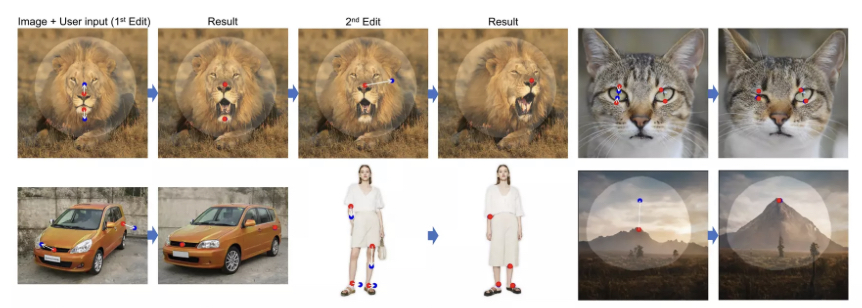

DragGAN Demo

The DragGAN technique lets users manipulate the content of GAN-generated images with just a few clicks. The user places handle points (shown in red) and target points (shown in blue), and the method moves each handle point until it reaches its corresponding target point. For added flexibility, users can draw a mask to define the region that may change (shown as a brighter area), while the rest of the image stays unchanged. This point-based manipulation gives users fine-grained control over spatial attributes such as pose, shape, expression, and layout across a wide range of object categories.
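The handle/target interaction can be sketched as a small data structure plus the per-step geometry. This is a toy illustration under stated assumptions, not DragGAN's implementation: here each handle point is simply nudged a fixed step toward its target, whereas the real method regenerates the image from an optimised latent code and re-locates the handle by point tracking. The edit is treated as finished once every handle is within a small tolerance of its target, and a binary mask marks which pixels may change; measuring the change outside the mask is one way an optimiser could be penalised for touching the fixed region. `DragPair`, `step_size`, `tolerance`, and the helper functions are all hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


@dataclass
class DragPair:
    """Hypothetical container for one user click pair."""
    handle: Point  # red point: where the content currently is
    target: Point  # blue point: where the user wants it to go


def step_toward(handle: Point, target: Point, step_size: float) -> Point:
    """Move `handle` at most `step_size` pixels along the straight line to `target`."""
    dx, dy = target[0] - handle[0], target[1] - handle[1]
    dist = math.hypot(dx, dy)
    if dist <= step_size:
        return target
    return (handle[0] + step_size * dx / dist, handle[1] + step_size * dy / dist)


def all_reached(pairs: List[DragPair], tolerance: float) -> bool:
    """True once every handle point lies within `tolerance` of its target."""
    return all(math.dist(p.handle, p.target) <= tolerance for p in pairs)


def change_outside_mask(original: List[List[float]], edited: List[List[float]],
                        mask: List[List[int]]) -> float:
    """Total absolute change in pixels where mask == 0 (the region meant to stay fixed)."""
    return sum(
        abs(e - o)
        for row_o, row_e, row_m in zip(original, edited, mask)
        for o, e, m in zip(row_o, row_e, row_m)
        if m == 0
    )


def drag(pairs: List[DragPair], step_size: float = 1.0,
         tolerance: float = 0.5, max_steps: int = 100) -> int:
    """Toy loop: nudge every handle toward its target until all arrive.
    Returns the number of steps taken (capped at `max_steps`)."""
    for step in range(max_steps):
        if all_reached(pairs, tolerance):
            return step
        for pair in pairs:
            pair.handle = step_toward(pair.handle, pair.target, step_size)
    return max_steps


if __name__ == "__main__":
    clicks = [DragPair(handle=(0.0, 0.0), target=(3.0, 4.0)),
              DragPair(handle=(10.0, 10.0), target=(10.0, 12.0))]
    print("steps:", drag(clicks))
```

Moving several handles in the same loop mirrors the idea that all clicked points are dragged together, and the step limit keeps the loop bounded if a target can never be reached.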
